
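1. Self-sign the API server SSL certificate

All access to and changes of resources in a K8S cluster go through the kube-apiserver REST API, so the kube-apiserver component is deployed on the master node first.

Start by issuing a set of SSL certificates for the apiserver; the process is similar to self-signing the etcd certificates. The commands below create several directories: ssl holds the self-signed certificates, cfg holds configuration files, bin holds executables, and logs holds log files.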

```bash
cd /
mkdir -p /k8s/kubernetes/{ssl,cfg,bin,logs}
cd /k8s/kubernetes/ssl
```

① Create the CA configuration file: ca-config.json

```bash
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF
```

② Create the CA certificate signing request file: ca-csr.json

```bash
cat > ca-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "kubernetes",
      "OU": "System"
    }
  ],
  "ca": {
    "expiry": "87600h"
  }
}
EOF
```

③ Generate the CA certificate and private key

```bash
cfssl gencert -initca ca-csr.json | cfssljson -bare ca
```

④ Create the certificate signing request file: kubernetes-csr.json

```bash
cat > kubernetes-csr.json <<EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.0.0.1",
    "10.1.1.140",
    "10.1.1.141",
    "10.1.1.142",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "kubernetes",
      "OU": "System"
    }
  ]
}
EOF
```

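- Notes

- hosts: the IP addresses and DNS names written into the certificate, that is, the addresses through which the apiserver is reached. List the host IPs of the etcd cluster and of the kubernetes master cluster, plus the service IP of the kubernetes Service (10.0.0.1 here, the first address of the Service IP range). Node IPs generally do not need to be added.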
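The 10.0.0.1 entry in hosts is the first address of the Service range (10.0.0.0/24 in this guide), which Kubernetes assigns to the built-in kubernetes Service. The following is a minimal sketch for deriving that address from a CIDR; the function name `first_ip_of_cidr` is a hypothetical helper, not part of any Kubernetes tool, and it handles IPv4 only.

```bash
# Hypothetical helper: print the first usable IPv4 address of a CIDR block.
first_ip_of_cidr() {
  cidr=$1
  ip=${cidr%/*}        # part before the slash, e.g. 10.0.0.0
  prefix=${cidr#*/}    # part after the slash, e.g. 24
  # Split the dotted quad into four octets.
  IFS=. read -r a b c d <<EOF
$ip
EOF
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  if [ "$prefix" -eq 0 ]; then
    mask=0
  else
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  fi
  # Network address plus one is the first usable address.
  first=$(( (n & mask) + 1 ))
  printf '%d.%d.%d.%d\n' \
    $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
    $(( (first >> 8) & 255 )) $(( first & 255 ))
}

first_ip_of_cidr 10.0.0.0/24   # prints 10.0.0.1
```

If the Service range is changed later, the corresponding entry in hosts has to change with it, otherwise in-cluster clients that reach the apiserver through the Service IP will fail certificate verification.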

⑤ Generate the certificate and private key for kubernetes

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes
```

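2. Deploy the kube-apiserver component

① Download the binary package

Download the installation binaries from the kubernetes GitHub project. This guide uses v1.16.2. The server package already contains every component needed on both master and node, so downloading the server package is enough.

Upload the downloaded kubernetes-v1.16.2-server-linux-amd64.tar.gz to /usr/local/src and extract it. Then copy the components that run on the master node into /k8s/kubernetes/bin:

```bash
cp -p /usr/local/src/kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler} /k8s/kubernetes/bin/
cp -p /usr/local/src/kubernetes/server/bin/kubectl /usr/local/bin/
```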

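② Create the Node token file: token.csv

Once the master apiserver enables TLS authentication, the kubelet on each Node must present a valid certificate issued by the CA before it can talk to the apiserver and join the cluster. Signing certificates by hand becomes tedious when there are many Nodes, which is why the TLS Bootstrap mechanism exists: the kubelet requests a certificate from the apiserver as a low-privilege user, and the kubelet certificate is signed dynamically. So first generate a token file for the apiserver; the token is used later on the Nodes.

The token is a random string and can be generated with the command below. The token configured for the apiserver must be identical to the one in the Node's bootstrap.kubeconfig.

```bash
head -c 16 /dev/urandom | od -An -t x | tr -d ' '
```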

Create token.csv:

```bash
cat > /k8s/kubernetes/cfg/token.csv <<'EOF'
bfa3cb7f6f21f87e5c0e5f25e6cfedad,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF
```
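The two steps above can also be combined so that a fresh token is generated and written in one go. This is only a sketch: `CFG_DIR` is a hypothetical variable that defaults to a temporary directory so nothing under /k8s is overwritten, and `od -t x1` is used here instead of the `-t x` shown above so the bytes are printed one at a time.

```bash
# Sketch only: set CFG_DIR=/k8s/kubernetes/cfg to write the real file.
CFG_DIR=${CFG_DIR:-$(mktemp -d)}

# 16 random bytes rendered as 32 lowercase hex characters.
TOKEN=$(head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n')

# Fields: token, user name, user UID, quoted group list.
printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$TOKEN" \
  > "$CFG_DIR/token.csv"

echo "wrote $CFG_DIR/token.csv"
```

Whatever token ends up in token.csv must also be placed in bootstrap.kubeconfig on every Node.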

③ Create the kube-apiserver configuration file: kube-apiserver.conf

```bash
cat > /k8s/kubernetes/cfg/kube-apiserver.conf <<'EOF'
KUBE_APISERVER_OPTS="--etcd-servers=https://10.1.1.140:2379,https://10.1.1.141:2379,https://10.1.1.142:2379 \
--bind-address=10.1.1.142 \
--secure-port=6443 \
--advertise-address=10.1.1.142 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--service-node-port-range=30000-32767 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth=true \
--token-auth-file=/k8s/kubernetes/cfg/token.csv \
--kubelet-client-certificate=/k8s/kubernetes/ssl/kubernetes.pem \
--kubelet-client-key=/k8s/kubernetes/ssl/kubernetes-key.pem \
--tls-cert-file=/k8s/kubernetes/ssl/kubernetes.pem \
--tls-private-key-file=/k8s/kubernetes/ssl/kubernetes-key.pem \
--client-ca-file=/k8s/kubernetes/ssl/ca.pem \
--service-account-key-file=/k8s/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/k8s/etcd/ssl/ca.pem \
--etcd-certfile=/k8s/etcd/ssl/etcd.pem \
--etcd-keyfile=/k8s/etcd/ssl/etcd-key.pem \
--v=2 \
--logtostderr=false \
--log-dir=/k8s/kubernetes/logs \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/k8s/kubernetes/logs/k8s-audit.log"
EOF
```

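- Notes

- --etcd-servers: addresses of the etcd cluster

- --bind-address: address the apiserver listens on, usually the host IP

- --secure-port: the port to listen on

- --advertise-address: the address advertised to the cluster; other Nodes connect to the apiserver through it. If unset, --bind-address is used

- --service-cluster-ip-range: virtual IP range for Services, in CIDR notation. It must not overlap with the real IP ranges of the physical hosts.

- --service-node-port-range: range of host ports that Services may map to; the default is 30000-32767

- --enable-admission-plugins: admission control settings for the cluster; each control module takes effect in turn as a plugin

- --authorization-mode: authorization mode. Available values are AlwaysAllow, AlwaysDeny, ABAC (attribute-based access control), Webhook, RBAC (role-based access control) and Node (dedicated to authorizing API requests issued by kubelets). The default is "AlwaysAllow"; the configuration above enables RBAC authorization and node self-management.

- --enable-bootstrap-token-auth: enables the TLS bootstrap feature

- --token-auth-file: the token file used to secure the API server's secure port through token authentication

- --v: log level, 0 to 8; the higher the value, the more detailed the log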

④ Create the apiserver service: kube-apiserver.service

```bash
cat > /usr/lib/systemd/system/kube-apiserver.service <<'EOF'
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
EnvironmentFile=-/k8s/kubernetes/cfg/kube-apiserver.conf
ExecStart=/k8s/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

⑤ Start the kube-apiserver component

```bash
systemctl daemon-reload
systemctl start kube-apiserver
systemctl enable kube-apiserver

# Check status
systemctl status kube-apiserver.service
```
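Besides the systemd status, the apiserver exposes a /healthz endpoint that answers with the body `ok` once it is serving. The sketch below polls such an endpoint with curl; `wait_for_healthz` is a hypothetical helper name, and the example URL assumes the local insecure port 127.0.0.1:8080 that the controller-manager and scheduler configuration in this guide point at.

```bash
# Hypothetical helper: poll a health URL until it returns "ok" or attempts run out.
wait_for_healthz() {
  url=$1
  attempts=${2:-30}   # number of tries, one second apart
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if [ "$(curl -s --max-time 2 "$url")" = "ok" ]; then
      echo "healthy: $url"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "not healthy after $attempts attempts: $url" >&2
  return 1
}

# Example, run on the master (assumed URL, see the note above):
# wait_for_healthz http://127.0.0.1:8080/healthz
```

If the check keeps failing, the log files under /k8s/kubernetes/logs are the first place to look.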

⑥ Bind the kubelet-bootstrap user to the system cluster role so that Nodes can later request certificates with the token

```bash
kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap
```

3. Deploy the kube-controller-manager component

① Create the kube-controller-manager configuration file: kube-controller-manager.conf

```bash
cat > /k8s/kubernetes/cfg/kube-controller-manager.conf <<'EOF'
KUBE_CONTROLLER_MANAGER_OPTS="--leader-elect=true \
--master=127.0.0.1:8080 \
--address=127.0.0.1 \
--allocate-node-cidrs=true \
--cluster-cidr=10.244.0.0/16 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-signing-cert-file=/k8s/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/k8s/kubernetes/ssl/ca-key.pem \
--root-ca-file=/k8s/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/k8s/kubernetes/ssl/ca-key.pem \
--experimental-cluster-signing-duration=87600h0m0s \
--v=2 \
--logtostderr=false \
--log-dir=/k8s/kubernetes/logs"
EOF
```

② Create the kube-controller-manager service: kube-controller-manager.service

```bash
cat > /usr/lib/systemd/system/kube-controller-manager.service <<'EOF'
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
EnvironmentFile=/k8s/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/k8s/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

③ Start the kube-controller-manager component

```bash
systemctl daemon-reload
systemctl start kube-controller-manager
systemctl enable kube-controller-manager

# Check status
systemctl status kube-controller-manager.service
```

4. Deploy the kube-scheduler component

① Create the kube-scheduler configuration file: kube-scheduler.conf

```bash
cat > /k8s/kubernetes/cfg/kube-scheduler.conf <<'EOF'
KUBE_SCHEDULER_OPTS="--leader-elect=true \
--master=127.0.0.1:8080 \
--address=127.0.0.1 \
--v=2 \
--logtostderr=false \
--log-dir=/k8s/kubernetes/logs"
EOF
```

② Create the kube-scheduler service: kube-scheduler.service

```bash
cat > /usr/lib/systemd/system/kube-scheduler.service <<'EOF'
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
EnvironmentFile=/k8s/kubernetes/cfg/kube-scheduler.conf
ExecStart=/k8s/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

③ Start the kube-scheduler component

```bash
systemctl daemon-reload
systemctl start kube-scheduler
systemctl enable kube-scheduler

# Check status
systemctl status kube-scheduler.service
```

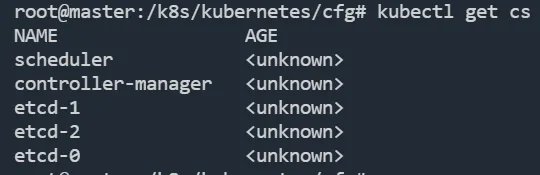

```bash
# Check the cluster status
kubectl get cs
```

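5. Deploy the Node components

Docker is a prerequisite on every node; install it by following the installation procedure on the official Docker site.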

① Create the certificate signing request file for the Node: kube-proxy-csr.json

In the ssl directory on the master node, use the CA issued earlier to create the certificate the Node will use. Start with the certificate signing request file kube-proxy-csr.json:

```bash
cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "kubernetes",
      "OU": "System"
    }
  ]
}
EOF

# Generate the certificate and private key for kube-proxy
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
```

② Create the working directories on the node

```bash
mkdir -p /k8s/kubernetes/{bin,cfg,logs,ssl}
```

Copy the files from the master node to the node:

```bash
scp -r /usr/local/src/kubernetes/server/bin/{kubelet,kube-proxy} root@node1:/k8s/kubernetes/bin/

# Copy the certificates to the k8s-node-1 node
scp -r /k8s/kubernetes/ssl/{ca.pem,kube-proxy.pem,kube-proxy-key.pem} root@node1:/k8s/kubernetes/ssl/
```

③ Install kubelet

On the node, create the configuration file used to request a certificate: bootstrap.kubeconfig. It is used to request a certificate from the apiserver; the apiserver checks that the token and certificate are valid and, if they are, the certificate is issued automatically.

```bash
cat > /k8s/kubernetes/cfg/bootstrap.kubeconfig <<'EOF'
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /k8s/kubernetes/ssl/ca.pem
    server: https://10.1.1.142:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubelet-bootstrap
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: bfa3cb7f6f21f87e5c0e5f25e6cfedad
EOF
```

- Notes

- certificate-authority: the CA certificate

- server: address of the master

- token: the token configured in token.csv on the master
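Because a mismatch between the two tokens makes the bootstrap request fail, it can be worth comparing them before starting the kubelet. The following is a minimal sketch; `bootstrap_token_matches` is a hypothetical helper, and it assumes a copy of the master's token.csv is available on the machine where the check runs.

```bash
# Hypothetical helper: compare the token in token.csv with the one in bootstrap.kubeconfig.
bootstrap_token_matches() {
  csv=$1
  kubeconfig=$2
  # First CSV field of the first well-formed line is the token.
  csv_token=$(awk -F, 'NF >= 3 { print $1; exit }' "$csv")
  # The kubeconfig carries it as "token: <value>".
  cfg_token=$(awk '$1 == "token:" { print $2; exit }' "$kubeconfig")
  if [ -n "$csv_token" ] && [ "$csv_token" = "$cfg_token" ]; then
    echo "token matches"
  else
    echo "token mismatch between $csv and $kubeconfig" >&2
    return 1
  fi
}

# Example (paths as used in this guide):
# bootstrap_token_matches /k8s/kubernetes/cfg/token.csv /k8s/kubernetes/cfg/bootstrap.kubeconfig
```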

Create the kubelet configuration file: kubelet-config.yml

For security, the kubelet forbids anonymous access; callers must be authorized. kubelet-config.yml authorizes the apiserver to access the kubelet.

```bash
cat > /k8s/kubernetes/cfg/kubelet-config.yml <<'EOF'
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /k8s/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 100000
maxPods: 110
EOF
```

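- Notes

- address: address the kubelet listens on

- port: the kubelet port

- cgroupDriver: the cgroup driver; must be the same as docker's cgroup driver

- authentication: how callers of the kubelet are authenticated

- authorization: how requests to the kubelet are authorized

- evictionHard: hard eviction thresholds; pods are evicted when available resources fall below them

- maxPods: maximum number of pods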
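Since cgroupDriver has to agree with docker's cgroup driver, the two values can be compared before the kubelet is started. This is a sketch under the assumption that docker is already installed and running on the node; `check_cgroup_driver` is a hypothetical helper name.

```bash
# Hypothetical helper: compare docker's cgroup driver with the one in kubelet-config.yml.
check_cgroup_driver() {
  kubelet_cfg=$1
  # docker reports its driver as cgroupfs or systemd.
  docker_driver=$(docker info --format '{{.CgroupDriver}}')
  kubelet_driver=$(awk '$1 == "cgroupDriver:" { print $2; exit }' "$kubelet_cfg")
  if [ -n "$kubelet_driver" ] && [ "$docker_driver" = "$kubelet_driver" ]; then
    echo "cgroup driver OK: $kubelet_driver"
  else
    echo "cgroup driver mismatch: docker=$docker_driver kubelet=$kubelet_driver" >&2
    return 1
  fi
}

# Example (path as used in this guide):
# check_cgroup_driver /k8s/kubernetes/cfg/kubelet-config.yml
```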

Create the kubelet service configuration file: kubelet.conf

```bash
cat > /k8s/kubernetes/cfg/kubelet.conf <<'EOF'
KUBELET_OPTS="--hostname-override=node1 \
--network-plugin=cni \
--cni-bin-dir=/opt/cni/bin \
--cni-conf-dir=/etc/cni/net.d \
--cgroups-per-qos=false \
--enforce-node-allocatable="" \
--kubeconfig=/k8s/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/k8s/kubernetes/cfg/bootstrap.kubeconfig \
--config=/k8s/kubernetes/cfg/kubelet-config.yml \
--cert-dir=/k8s/kubernetes/ssl \
--pod-infra-container-image=kubernetes/pause:latest \
--v=2 \
--logtostderr=false \
--log-dir=/k8s/kubernetes/logs"
EOF
```

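- Notes

- --hostname-override: the name under which this node is registered in K8S. Use the machine's hostname, because it is used for name resolution later

- --network-plugin: enables the CNI network plugin

- --cni-bin-dir: location of the CNI plugin executables, /opt/cni/bin by default

- --cni-conf-dir: location of the CNI plugin configuration files, /etc/cni/net.d by default

- --cgroups-per-qos: this flag and --enforce-node-allocatable must both be set, otherwise the kubelet fails with [Failed to start ContainerManager failed to initialize top level QOS containers.......]

- --kubeconfig: kubelet.kubeconfig is generated automatically at this path and used to connect to the apiserver

- --bootstrap-kubeconfig: path of the bootstrap.kubeconfig file

- --config: the kubelet configuration file

- --cert-dir: certificate directory

- --pod-infra-container-image: the image that manages the Pod network, i.e. the base Pause container; the default is k8s.gcr.io/pause:3.1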

Create the kubelet service: kubelet.service

```bash
cat > /usr/lib/systemd/system/kubelet.service <<'EOF'
[Unit]
Description=Kubernetes Kubelet
After=docker.service

[Service]
EnvironmentFile=/k8s/kubernetes/cfg/kubelet.conf
ExecStart=/k8s/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

Start the kubelet:

```bash
systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet
```

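Authorize the node from the master

After the kubelet starts, it has not yet joined the cluster. It requests a certificate from the apiserver, and the node must be approved manually on k8s-master-1.

Run kubectl get csr to check whether a new client has requested a certificate.

Issue the certificate to the client so that it can join the cluster:

```bash
# The argument is the request name listed by kubectl get csr above
kubectl certificate approve node-csr-FoPLmv3Sr2XcYvNAineE6RpdARf2eKQzJsQyfhk-xf8

# If tab completion does not work for kubectl, enable it with:
source <(kubectl completion bash)
# This is lost after a restart; add the line to .bash_profile to keep it
```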
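When several nodes bootstrap at the same time, copying each request name by hand gets tedious. The sketch below approves every request whose condition is Pending; `approve_pending_csrs` is a hypothetical helper, it assumes the condition is the last column of the kubectl get csr output, and blanket approval is only reasonable in a lab where every pending request is expected.

```bash
# Hypothetical helper: approve all certificate signing requests that are still Pending.
approve_pending_csrs() {
  kubectl get csr --no-headers 2>/dev/null |
    awk '$NF == "Pending" { print $1 }' |
    while read -r name; do
      # Keep kubectl from consuming the list of names on stdin.
      kubectl certificate approve "$name" </dev/null
    done
}

# Example, run on the master:
# approve_pending_csrs
```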

6. Install kube-proxy

① On the node, create the configuration file kube-proxy uses to connect to the apiserver: kube-proxy.kubeconfig

```bash
cat > /k8s/kubernetes/cfg/kube-proxy.kubeconfig <<'EOF'
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /k8s/kubernetes/ssl/ca.pem
    server: https://10.1.1.142:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kube-proxy
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kube-proxy
  user:
    client-certificate: /k8s/kubernetes/ssl/kube-proxy.pem
    client-key: /k8s/kubernetes/ssl/kube-proxy-key.pem
EOF
```

② Create the kube-proxy configuration file: kube-proxy-config.yml

```bash
cat > /k8s/kubernetes/cfg/kube-proxy-config.yml <<'EOF'
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /k8s/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: node1
# Pod network CIDR; keep it equal to --cluster-cidr in kube-controller-manager.conf
clusterCIDR: 10.244.0.0/16
mode: ipvs
ipvs:
  scheduler: "rr"
iptables:
  masqueradeAll: true
EOF
```

③ Create the kube-proxy service configuration file: kube-proxy.conf

```bash
cat > /k8s/kubernetes/cfg/kube-proxy.conf <<'EOF'
KUBE_PROXY_OPTS="--config=/k8s/kubernetes/cfg/kube-proxy-config.yml \
--v=2 \
--logtostderr=false \
--log-dir=/k8s/kubernetes/logs"
EOF
```

④ Create the kube-proxy service: kube-proxy.service

```bash
cat > /usr/lib/systemd/system/kube-proxy.service <<'EOF'
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=/k8s/kubernetes/cfg/kube-proxy.conf
ExecStart=/k8s/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

⑤ Start kube-proxy

```bash
systemctl daemon-reload
systemctl start kube-proxy
systemctl enable kube-proxy
```

Then repeat the same procedure on node2, remembering to change the node name.
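In the files created in this guide, the node name appears in two places: --hostname-override in kubelet.conf and hostnameOverride in kube-proxy-config.yml. If the cfg directory is copied from node1 to node2, a sketch like the following can rewrite both; `rename_node` is a hypothetical helper, and the hostname node2 is assumed from the sentence above.

```bash
# Hypothetical helper: replace the node name in kubelet.conf and kube-proxy-config.yml.
rename_node() {
  old=$1
  new=$2
  dir=$3
  for f in "$dir/kubelet.conf" "$dir/kube-proxy-config.yml"; do
    [ -f "$f" ] || continue
    # Rewrite via a temporary file so the edit does not depend on sed -i.
    sed -e "s/--hostname-override=$old /--hostname-override=$new /" \
        -e "s/^hostnameOverride: $old\$/hostnameOverride: $new/" \
        "$f" > "$f.tmp" && mv "$f.tmp" "$f"
  done
}

# Example, run on node2 after copying the cfg directory:
# rename_node node1 node2 /k8s/kubernetes/cfg
```

The certificates issued to node1 by the bootstrap process should not be copied; node2 requests its own when its kubelet starts.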

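7. Deploy the K8S container cluster network (Flannel)

① K8S cluster networking

Kubernetes does not use Docker's network model. Instead, it uses the CNI interface to maintain a separate bridge that replaces docker0; by default this bridge is named cni0.

CNI (Container Network Interface) is a CNCF project consisting of a specification and libraries for configuring network interfaces in Linux containers, together with a number of plugins. CNI is concerned only with allocating network resources when a container is created and releasing them when the container is deleted.

Flannel is a CNI plugin and can be regarded as one implementation of the CNI interface. It is a network planning service designed for Kubernetes: it gives the Docker containers created on different node hosts cluster-wide unique virtual IP addresses and lets containers on different nodes communicate directly over these internal IPs.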

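② Create the CNI working directories

The CNI plugin is enabled by passing the --network-plugin=cni command-line option to the kubelet. The kubelet reads configuration files from --cni-conf-dir (default /etc/cni/net.d) and uses the CNI configuration in them to set up each pod's network. The CNI configuration file must conform to the CNI specification, and every CNI plugin the configuration references must exist in the directory given by --cni-bin-dir (default /opt/cni/bin).

Because the kubelet service was deployed earlier with --cni-conf-dir=/etc/cni/net.d and --cni-bin-dir=/opt/cni/bin, first create these two directories on the node:

```bash
mkdir -p /opt/cni/bin /etc/cni/net.d
```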

③ Install the CNI plugins

The CNI plugins can be downloaded from GitHub.

Extract the archive into /opt/cni/bin:

```bash
tar zxf cni-plugins-linux-amd64-v0.8.2.tgz -C /opt/cni/bin/
```

④ Deploy Flannel

Download the flannel manifest kube-flannel.yml. Pay attention to one setting in it: the Network address must be the same as --cluster-cidr=10.244.0.0/16 in kube-controller-manager.conf.
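The consistency requirement can be checked mechanically before the manifest is applied. The following is a minimal sketch that assumes kube-flannel.yml carries the pod network as a `"Network": "<cidr>"` entry in its net-conf.json section; `flannel_cidr_matches` is a hypothetical helper name.

```bash
# Hypothetical helper: succeed when flannel's "Network" equals --cluster-cidr.
flannel_cidr_matches() {
  flannel_yml=$1
  kcm_conf=$2
  # Pull the CIDR out of the "Network": "..." line of the manifest.
  network=$(sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$flannel_yml" | head -n 1)
  # Pull the CIDR out of the --cluster-cidr=... flag.
  cluster_cidr=$(sed -n 's/.*--cluster-cidr=\([^ "]*\).*/\1/p' "$kcm_conf" | head -n 1)
  if [ -n "$network" ] && [ "$network" = "$cluster_cidr" ]; then
    echo "pod network matches: $network"
  else
    echo "mismatch: flannel Network='$network', --cluster-cidr='$cluster_cidr'" >&2
    return 1
  fi
}

# Example, run on the master:
# flannel_cidr_matches kube-flannel.yml /k8s/kubernetes/cfg/kube-controller-manager.conf
```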

Deploy Flannel from the master node:

```bash
kubectl apply -f kube-flannel.yml
```

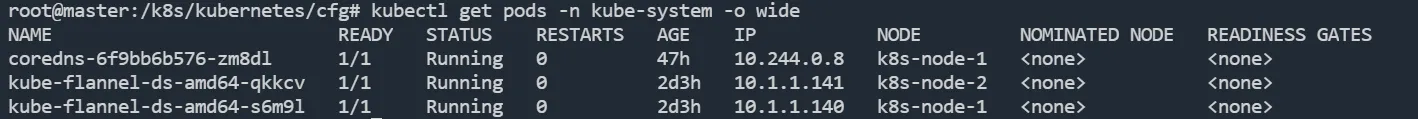

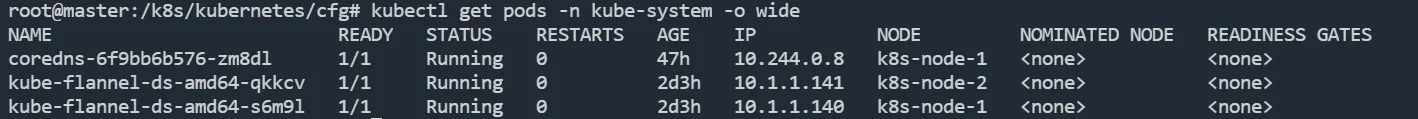

⑤ Check the deployment status

Flannel starts a Flannel Pod on each Node. Check the pod status to see whether flannel started successfully:

```bash
kubectl get pods -n kube-system -o wide
```

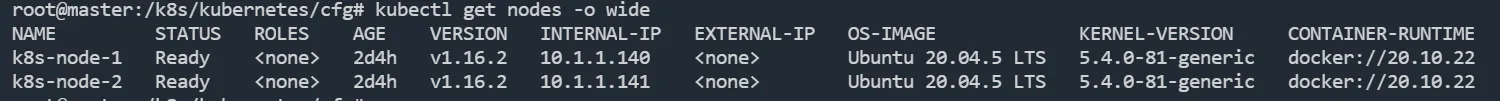

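Once Flannel is deployed successfully, check whether the Nodes are Ready.

For the Nodes to become Ready, after the deployment on the master has finished, the CNI plugins must also be present in /opt/cni/bin/ on both nodes.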

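8. Deploy the internal DNS service

With the master, the nodes and the CNI network deployed, one problem remains: a container started now cannot resolve names, so it cannot reach external hosts by name. An internal DNS service has to be deployed to handle resolution.

Within a Kubernetes cluster, the Service Name is the recommended address for accessing a service. This requires a cluster-wide DNS service that resolves a Service Name to its Cluster IP, which is the DNS-based service discovery feature of Kubernetes.

① Deploy CoreDNS

The CoreDNS manifest is coredns.yaml. Note that clusterIP must be the same as clusterDNS in kubelet-config.yml.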

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            fallthrough in-addr.arpa ip6.arpa
            ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      containers:
      - name: coredns
        image: k8s.gcr.io/coredns:1.6.7
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 160Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.0.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
```

Deploy CoreDNS:

```bash
kubectl apply -f coredns.yaml
```

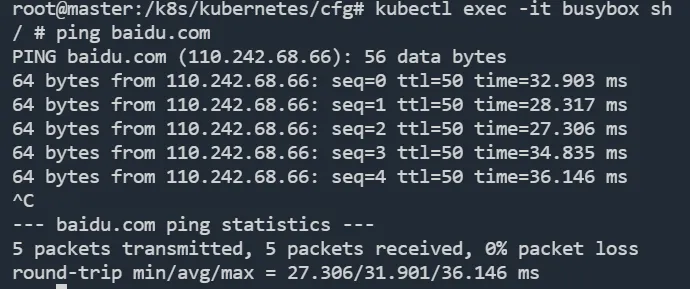

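Now open any container and run ping against an external host; name resolution works and the external network is reachable.

Be aware that CoreDNS may report the following error when it is deployed:

plugin/loop: Loop (127.0.0.1:55710 -> :53) detected for zone ".", see https://coredns.io/plugins/loop#troubleshooting. Query: "HINFO 6229007223346367857.5322622110587761536."

This happens because the system DNS configuration contains a 127.0.x.x address. Edit /etc/resolv.conf and replace that entry with any reachable, non-loopback nameserver.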
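Whether a host is affected can be checked before CoreDNS is deployed by looking for loopback entries in its resolver configuration. This is a minimal sketch covering only IPv4 loopback addresses (127.x.x.x); `loopback_nameservers` is a hypothetical helper name.

```bash
# Hypothetical helper: print every nameserver entry that points at 127.x.x.x.
loopback_nameservers() {
  awk '$1 == "nameserver" && $2 ~ /^127\./ { print $2 }' "${1:-/etc/resolv.conf}"
}

# Report on this host's resolver file if it is readable.
if [ -r /etc/resolv.conf ]; then
  found=$(loopback_nameservers /etc/resolv.conf)
  if [ -n "$found" ]; then
    echo "loopback nameserver(s) found: $found"
  else
    echo "no loopback nameserver in /etc/resolv.conf"
  fi
fi
```

Any address the check prints is one that the forward . /etc/resolv.conf line in the Corefile would send queries back to, which is what triggers the loop detection.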